In my previous blog post I referenced that I planned to detail out how to extract and calculate CPU utilization from other sources including, DBA_HIST_SERVICE_STAT (example use case: service-level CPU utilization), DBA_HIST_SYSSTAT (various Oracle process level CPU statistics), and DBA_HIST_SYS_TIME_MODEL (various Oracle process level CPU statistics).

If you are looking for a very specific slice/granularity from Oracle performance metrics, you may find some difficulties using the standard out-of-the-box performance monitoring and analysis tools as they are designed to address a limited set of common questions. These tools often provide predefined reports, metrics, and visualizations that cater to general performance analysis needs. However, the realm of performance metrics is vast, and there is a multitude of possible questions and scenarios that may require deeper exploration. By experimenting with metrics and querying them in a clever and customized manner, you can unlock hidden insights and answer specific questions that go beyond the scope of standard tools.

Querying performance metrics, particularly CPU-related metrics, in a creative way plays a crucial role in analyzing application performance and making informed capacity planning decisions based on historical data. The process often involves exploring the multitude of metrics and statistics stored in the Automated Workload Repository (AWR), which are sometimes undocumented or inadequately documented. Delving into the hidden secrets of CPU utilization in Oracle databases requires a deeper understanding of these metrics.

Further, experimenting with metrics helps you gain a better understanding of the underlying system behavior and performance characteristics. It encourages a deeper exploration of the database's internals, providing insights into how different components, processes, and workloads interact and impact CPU utilization and thus, application performance.

Moreover, customized querying and analysis of metrics foster innovation and discovery. By going beyond the confines of predefined reports and tools, you have the opportunity to uncover novel insights, discover optimization opportunities, and potentially develop new performance analysis techniques or approaches (e.g. feature engineering, correlation analysis, regression analysis, anomaly detection, time-series analysis, aggregation/sumarization, …)

Again, by tapping into these hidden sources that need to be calculated, you can gain a more granular understanding of how your database is using CPU resources which can help with a variety of tasks such as workload relocation and maximizing performance.

In this post we’ll go through other sources of CPU information persisted in the database. The main reason I want to dive deeper is that Host CPU Utilization % is a system-level metric that does not provide insight into how CPU resources are being used by specific processes or users within a database. I’ve come across many use cases where clients are asking how much CPU is being used in a particular area. If you read my prior post, you saw how we can get very rough CPU utilization figures from DBA_HIST_ACTIVE_SESS_HISTORY (ASH). I would caution against using ASH as a definitive representation of CPU utilization primarily because every 10 rows from the v$ version of ASH (1 second samples) are rolled up into the history version of ASH, so at best it sort of represents 10 seconds of sample time. For a source where you can get CPU breakdown/granularity close to what you get in ASH (if the applications are using services) I look to DBA_HIST_SERVICE_STAT.

DBA_HIST_SERVICE_STAT

DBA_HIST_SERVICE_STAT (service stat) is a source where you can get CPU breakdown/granularity similar to what you get in ASH (if the applications are using services); service stat is at the service level rather than at the user level. With this, I can easily answer what is the CPU utilization for each service as the basic information is persisted in the stat name ‘DB CPU’. With this statistic, calculated to a well understood metric of percent CPU utilization, I can help clients inform service relocation or service rehosting decisions. Calculating percent CPU utilization is really quite simple and follows the same model as I used before:

sum(sec_on_cpu) / (minutes_in_snapshot_interval * seconds_per_minute * num_cpus * 100)

Really the only trick is knowing that the unit of measure for DB CPU is in microseconds (i.e. divide by 1,000,000 to get seconds). There is one other trick or thing to remember: the data is persisted cumulatively, so you can use the lag function to help you calculate the delta in DB CPU for an interval; also, by using the lag function you’ll miss calculating a value for the first snap_id unless you expand the selection.

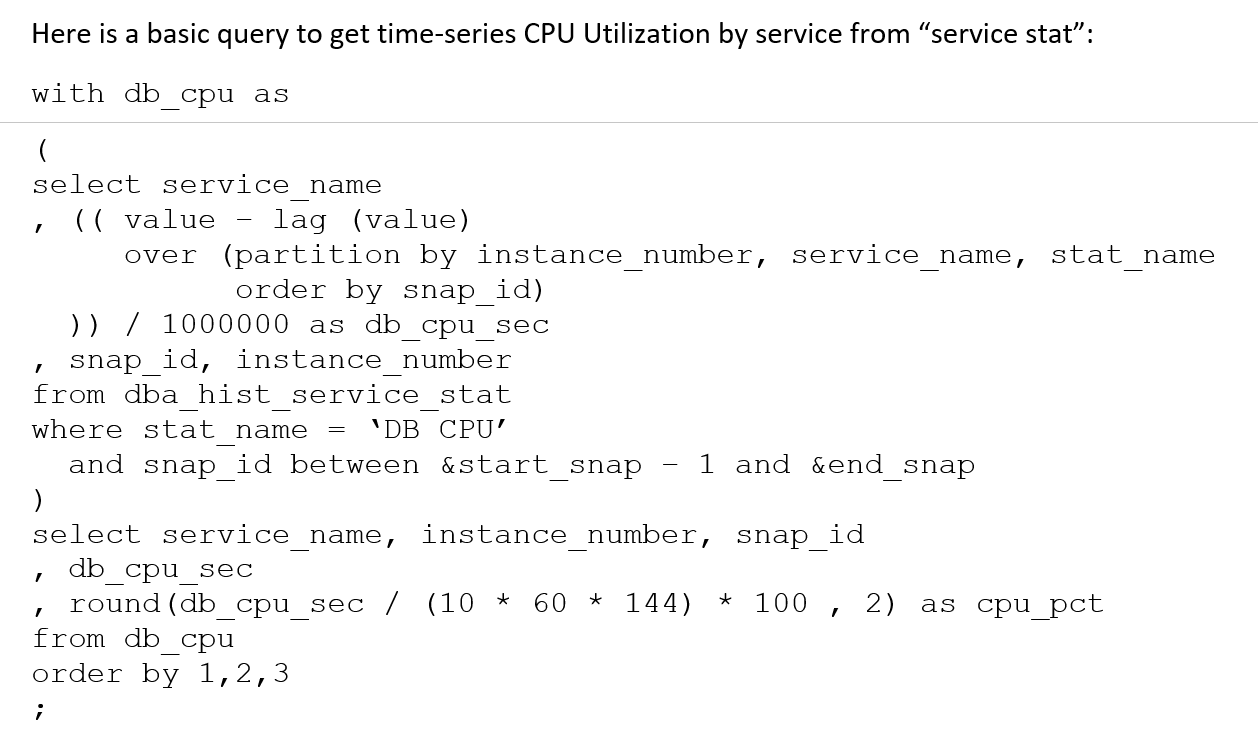

Here is a basic query to get time-series CPU Utilization by service from “service stat”:

with db_cpu as(select service_name, (( value – lag (value) over (partition by instance_number, service_name, stat_name order by snap_id) )) / 1000000 as db_cpu_sec, snap_id, instance_numberfrom dba_hist_service_statwhere stat_name = ‘DB CPU’ and snap_id between &start_snap – 1 and &end_snap)select service_name, instance_number, snap_id, db_cpu_sec, round(db_cpu_sec / (10 * 60 * 144) * 100 , 2) as cpu_pctfrom db_cpuorder by 1,2,3;

Note: the constants above are as follows:

- 10 is the number of minutes in my snapshot interval (use value for your snapshot interval).

- 60 is the number of seconds in a minute (use this unless you live on another planet 😉).

- 144 is the number of CPU’s on the machine (use the value for your CPU count).

It’s easy to forget that values persisted in the statistics, DB Time and DB CPU, don’t include the resources used by Oracle’s background processes, therefore, the overall figures you will see from service stat won’t add up to the values you’ll get from say, ‘Host CPU Utilization (%)’, from DBA_HIST_SYSMETRIC_SUMMARY.

DBA_HIST_SERVICE_STAT provides service-level values for 28 individual statistics and thus is a valuable source of information for understanding how services are consuming database resources. This view provides historical statistics that capture various aspects of service activity and resource usage over time; for example: aggregate wait times in the various wait classes; physical reads/writes; user calls, commits, rollbacks; DB Time, DB CPU; and many more.

By querying DBA_HIST_SERVICE_STAT, you can gain insights into the resource consumption patterns of different services in the database. The statistics recorded in this view can include metrics such as CPU usage, memory consumption, I/O activity, wait times, and other performance-related information.

Analyzing the data from DBA_HIST_SERVICE_STAT can help you:

1. Identify resource-intensive services: You can determine which services are consuming the most CPU, memory, or I/O resources, allowing you to prioritize performance optimization efforts.

2. Track service performance trends: By examining the historical statistics, you can identify patterns or trends in service behavior and resource utilization over time. This information can help in capacity planning and proactive performance management.

3. Investigate performance issues: If a particular service is experiencing performance problems, DBA_HIST_SERVICE_STAT can provide valuable data for root cause analysis. You can compare performance metrics before and after the occurrence of an issue to identify potential causes.

4. Optimize resource allocation: The statistics in DBA_HIST_SERVICE_STAT can help you fine-tune resource allocation for different services. By understanding their resource consumption patterns, you can allocate CPU, memory, and I/O resources more effectively to ensure optimal performance.

Overall, DBA_HIST_SERVICE_STAT offers a wealth of historical information that enables you to analyze and optimize how services are utilizing database resources. It empowers database administrators to make informed decisions, improve performance, and ensure efficient resource utilization across different services in the Oracle database.

DBA_HIST_SYSSTAT

DBA_HIST_SYSSTAT (sysstat) exposes a historic accounting of 2042 (in 19c) different Oracle process-level or function-level statistics. Likely you will not get to dive deep into most of these, but I highly recommend exploring these metrics as performance indicators.

The DB CPU equivalent statistic in the DBA_HIST_SYSSTAT view is “CPU used by this session”. In a way similar to DBA_HIST_SERVICE_STAT, this statistic can be used to calculate a CPU % at the system level. Don’t forget that this statistic does not include background CPU usage.

It’s really quite simple to compute the CPU % from this statistic and it follows the same model as we used for service stat:

sum(sec_on_cpu) / (minutes_in_snapshot_interval * seconds_per_minute * num_cpus * 100)

Don’t forget that the unit of measure for “CPU used by this session” is in centi-seconds (so, simply divide by 100 to get seconds). As with service stat the data in sysstat is persisted cumulatively, so you can use the lag function to help you calculate the delta in “CPU used by this session” for an interval; again, by using the lag function you’ll miss calculating a value for the first snap_id unless you expand the selection.

Here is a basic query to get time-series CPU Utilization from “sysstat”:

with db_cpu as

(

select snap_id, instance_number

, (( value – lag (value)

over (partition by instance_number, stat_name

order by snap_id)

)) / 100 as db_cpu_sec

from dba_hist_sysstat

where stat_name = ‘CPU used by this session’

and snap_id between &start_snap – 1 and &end_snap

)

select instance_number, snap_id

, db_cpu_sec

, round(db_cpu_sec / (10 * 60 * 144) * 100 , 2) as cpu_pct

from db_cpu

order by 1,2

;

Note: the constants above are as follows:

- 10 is the number of minutes in my snapshot interval (use value for your snapshot interval).

- 60 is the number of seconds in a minute (use this unless you live on another planet 😉).

- 144 is the number of CPU’s on the machine (use the value for your CPU count).

In a comparative CPU analysis, you can get a better feel for how CPU is being used by referencing the DBA_HIST_SYSSTAT statistics: "parse time CPU," "recursive CPU usage," and "CPU used by this session". In this use case I’m interested in the proportion of CPU being consumed by parsing, recursive calls and everything else. For example, I have seen many cases when recursive CPU goes way up and accounts for the bulk of the CPU usage. Recursive operations include activities like dictionary queries, constraint checks, and query transformations. An uptick in recursive CPU usage can be caused by complex queries and recursive SQL, dictionary operations, extensive query transformations, dynamic SQL and cursor management, SQL statement recompilation, and inefficient application design or coding practices. To address this, optimize SQL statements, minimize dynamic SQL usage, improve application design, and ensure efficient system configurations and resource monitoring.

The above use case is pretty much the only use case I’ve used recently for CPU metrics from DBA_HIST_SYSSTAT, but with over 2000 metrics in sysstat, there is a lot more to explore and gain insights from. For example, ‘table fetch continue row’ is an indicator of row chaining and perhaps fragmented blocks. You can run the Segment Tuning Advisor to find out which objects need to be reorganized.

I regularly use sysstat in my anomaly detection code to flag anomalous metrics in a bad time interval compared to a good time interval. In a recent performance case, many SQL had high values for the wait event “enq: TX – index contention”, with ASH we can find out the objects that are affected, but we can also get some additional information from DBA_HIST_SYSSTAT regarding internal workings of the Oracle processes. In this same example, I also saw high values for metrics such as “index crx state invalidation”, “recursive aborts on index block reclamation”, “failed probes on index block reclamation”, “index crx upgrade (positioned)”, “index split cancel op set”, and “index reclamation/extension switch”. So, obviously, this indicates that there is contention among transactions trying to modify the same index concurrently, leading to the delays/waits.

The sysstat statistics flagged in the anomaly detection modeling provide additional insights into the internal workings and consequences of the particular index contention issue:

"Index crx state invalidation": This statistic represents the number of times the consistent read (CR) mode of accessing an index is invalidated due to contention. It indicates that transactions attempting consistent reads on the index were affected by the contention and had to be invalidated.

"Recursive aborts on index block reclamation"*: This statistic counts the number of times recursive operations, specifically aborts, occurred during index block reclamation. Recursive operations are internal actions performed by Oracle to maintain data integrity and consistency. High values suggest that the index block reclamation process was interrupted or experienced difficulties due to contention.

* Index block reclamation refers to the process of reclaiming or freeing up space within an index block in an Oracle database. When an index block becomes full due to insertions, updates, or deletions, it needs to be divided or split into multiple blocks to accommodate additional data. Index block reclamation involves rearranging and redistributing the data within the index block to optimize space utilization and ensure efficient access to index entries. The goal of index block reclamation is to maintain the index's performance and prevent excessive block fragmentation, which can degrade query performance.

"Failed probes on index block reclamation": This statistic indicates the number of unsuccessful attempts to probe or locate specific information during index block reclamation. It suggests that contention might have hindered the probing process, resulting in failed attempts.

"Index crx upgrade (positioned)": This statistic tracks the number of times an index is upgraded from the consistent read (CR) mode to the current mode (positioned). It signifies that transactions accessing the index encountered contention, leading to the need for an upgrade to the current mode for proper access.

"Index split cancel op set": This statistic represents the number of times a split operation on an index block was canceled due to contention. Index splits occur when a block becomes full, and it needs to be divided into two blocks. High values indicate that the splitting process was interrupted or abandoned due to contention.

"Index reclamation/extension switch": This statistic counts the number of switches between index reclamation and extension operations due to contention. It indicates that contention affected the normal process of reclaiming or extending index blocks, requiring switches between these operations.

In summary, the observation of SQL statements waiting on "enq: TX - index contention" and the associated anomalous statistics from sysstat reveal additional details on the impact and consequences of contention among transactions accessing the same index. The metrics flagged by the anomaly detection modeling and subsequent analysis provides a valuable learning experience and an opportunity for experimentation to understand the underlying causes and effects of the contention. By analyzing these statistics, putting on your thinking cap and delving into the associated learning and experimentation, it becomes possible to get a much more granular view into the extent and nature of the contention issue. The knowledge gained by such anomaly modeling will serve to expand your capability for troubleshooting and optimizing the affected SQL statements and index structures, (and hopefully) leading to improved performance and overall database efficiency. Reference book regarding the anomaly detection: Dynamic Oracle Performance Analytics - Using Normalized Metrics to Improve Database Speed http://www.apress.com/9781484241363 .

Sidebar note on DB CPU and DB Time

DB Time and DB CPU are two important performance metrics in Oracle databases that can provide insights into how the database is utilizing CPU resources. Here's a brief comparison/contrast of the two metrics:

DB Time: This metric represents the total amount of time spent by user processes in the database, including both CPU time and non-CPU wait time (such as I/O wait time, network latency, etc.). DB Time can be used to identify performance bottlenecks and quantify the overall efficiency of the database. It is a useful metric for identifying areas where tuning or optimization may be needed to reduce wait time and improve overall performance.

DB CPU: This metric represents the amount of CPU time used by user processes in the database. It does not include non-CPU wait time, so it provides a more focused view of CPU utilization in the database. DB CPU can be used to identify specific SQL statements, PL/SQL code, or other database components that are consuming excessive CPU resources. This information can be used to optimize these components and reduce overall CPU usage, improving database performance.

In terms of their implementation, both metrics can be found in several places in Oracle databases, including dba_hist_sys_time_model, dba_hist_service_stat, and dba_hist_sysstat [DB CPU is under CPU used by this session in sysstat]. However, the specific details and calculations for each metric may vary depending on the context in which they are used.

Overall, DB Time and DB CPU are both useful performance metrics in Oracle databases, providing different types of insights into how CPU resources are being utilized. DB Time provides a more holistic view of performance, while DB CPU provides a more focused view of CPU usage. Together, these metrics can help database administrators optimize resource usage and improve overall database performance.